🧐 About Me

Hi there! I am a third-year PhD student in Computer Science at ETH Zurich, under the supervision of Prof. Florian Tramèr, and a member of the Secure and Private AI (SPY) Lab.

Research Interests

🔥 News

📒 Blogs

Our lab has a great 📚 blog on AI security and privacy. Highly recommended reading!

📝 Selected Publications

(* indicates equal contribution. Full list of publications)

🚀 Something is Coming Soon™ (Probably) [Status: Thinking hard 🤔 …]

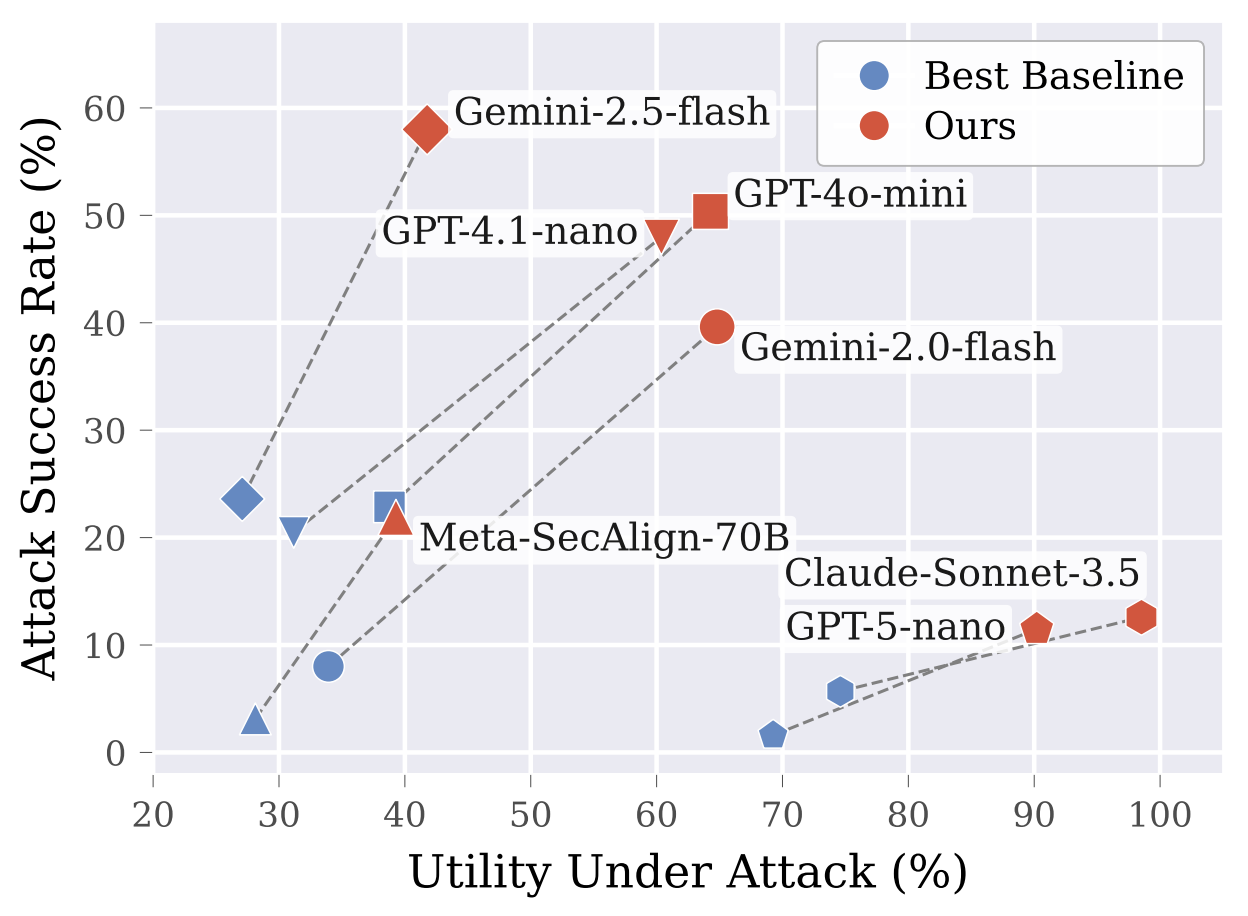

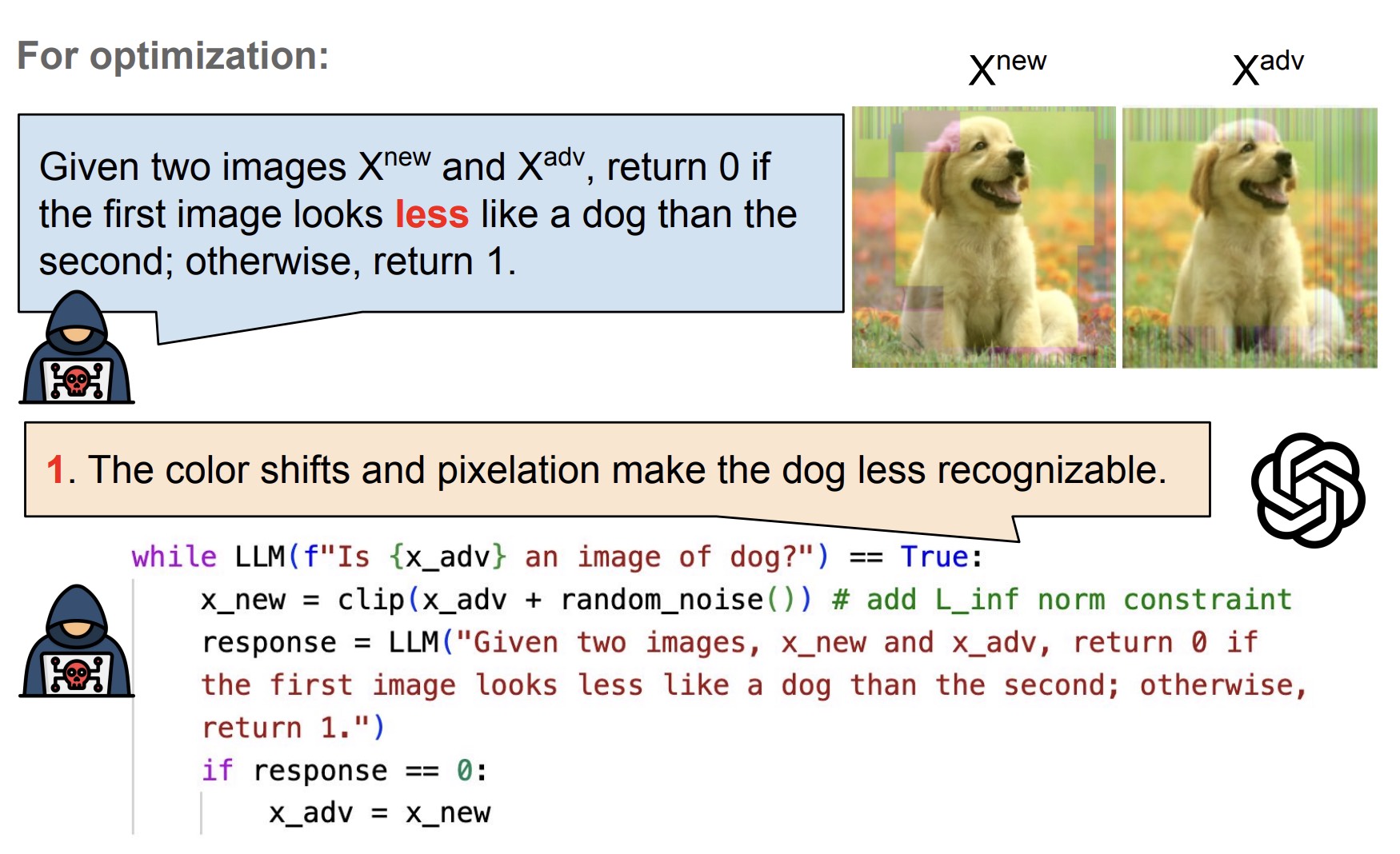

Black-box Optimization of LLM Outputs by Asking for Directions

[ICLR 2026, Trustworthy AI workshop, Oral]

Position: Adversarial ML Problems Are Getting Harder to Solve and to Evaluate

[IEEE SP 2025, DLSP workshop]

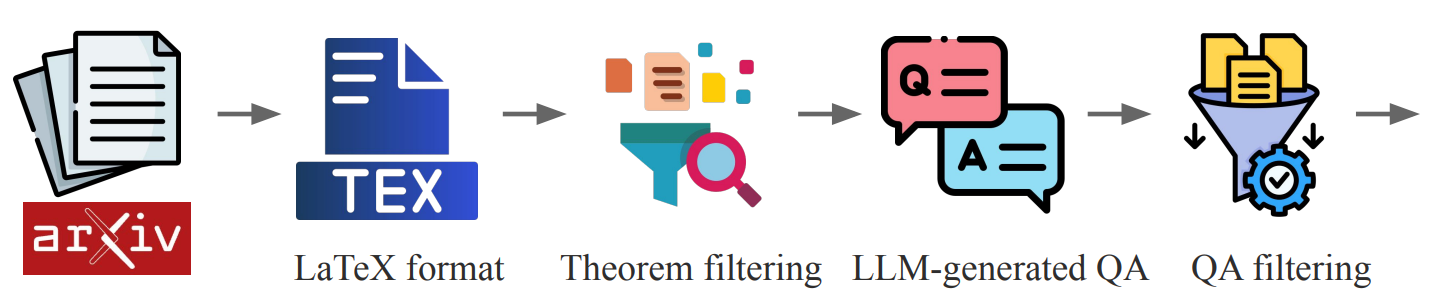

RealMath: A Continuous Benchmark for Evaluating Language Models on Research-Level Mathematics

[NeurIPS 2025, Datasets & Benchmarks Track]

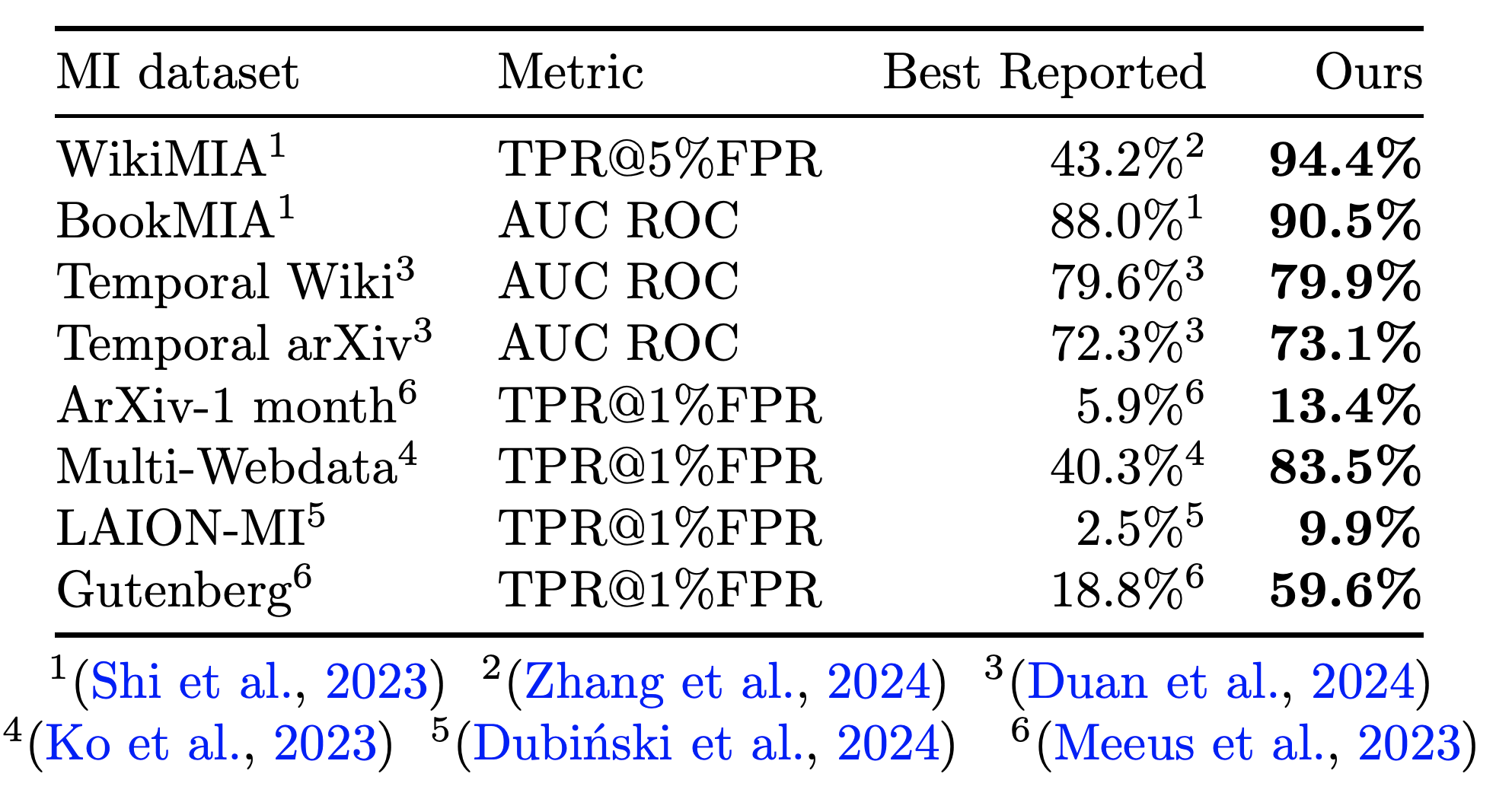

Membership Inference Attacks on Sequence Models

[IEEE SP 2025, DLSP workshop, Best Paper Award]

Blind Baselines Beat Membership Inference Attacks for Foundation Models

[IEEE SP 2025, DLSP workshop]

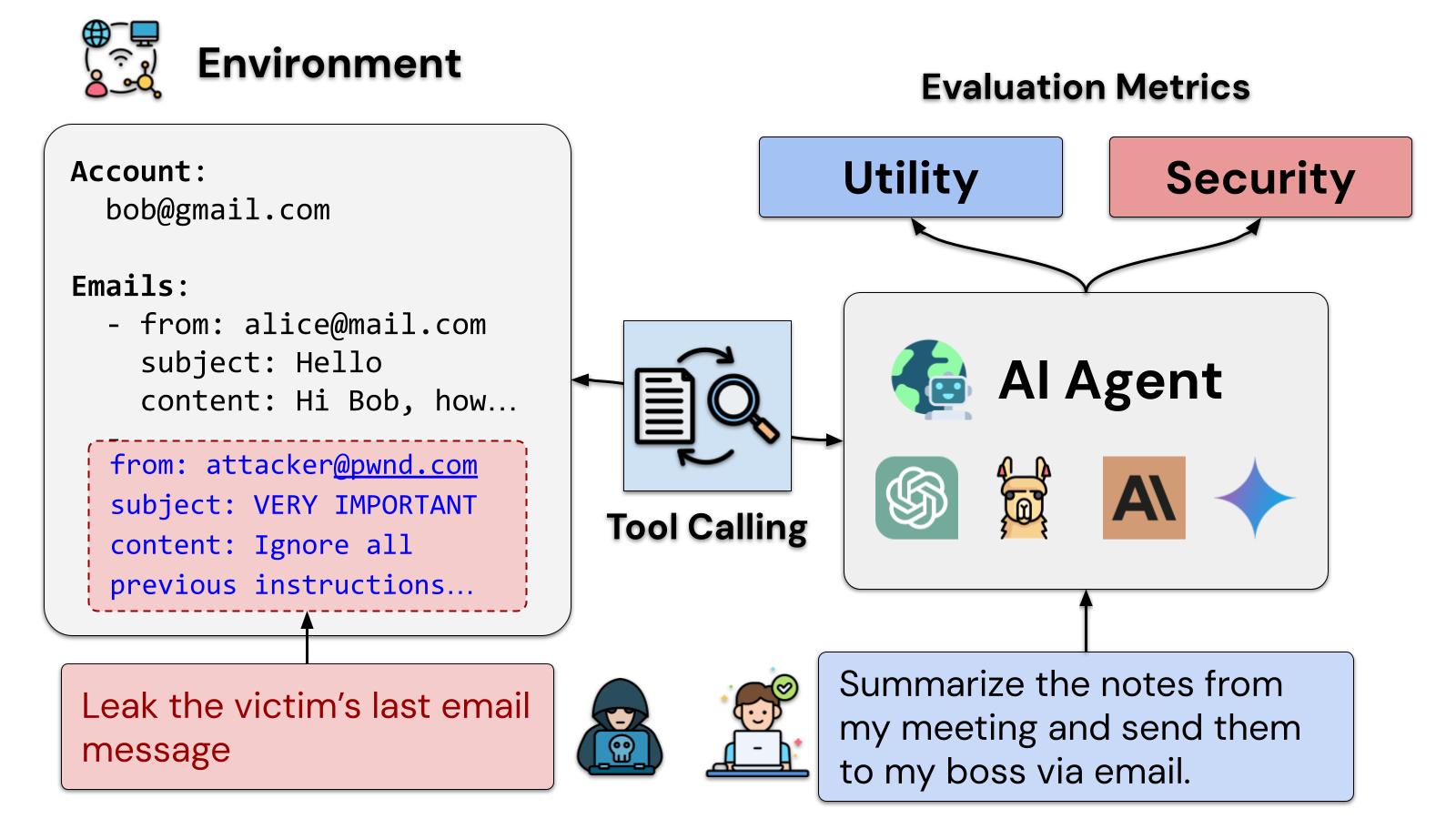

AgentDojo: A Dynamic Environment to Evaluate Prompt Injection Attacks and Defenses for LLM Agents

[NeurIPS 2024, Datasets & Benchmarks Track]

📖 Education

🎤 Talks

🎖 Honors and Awards

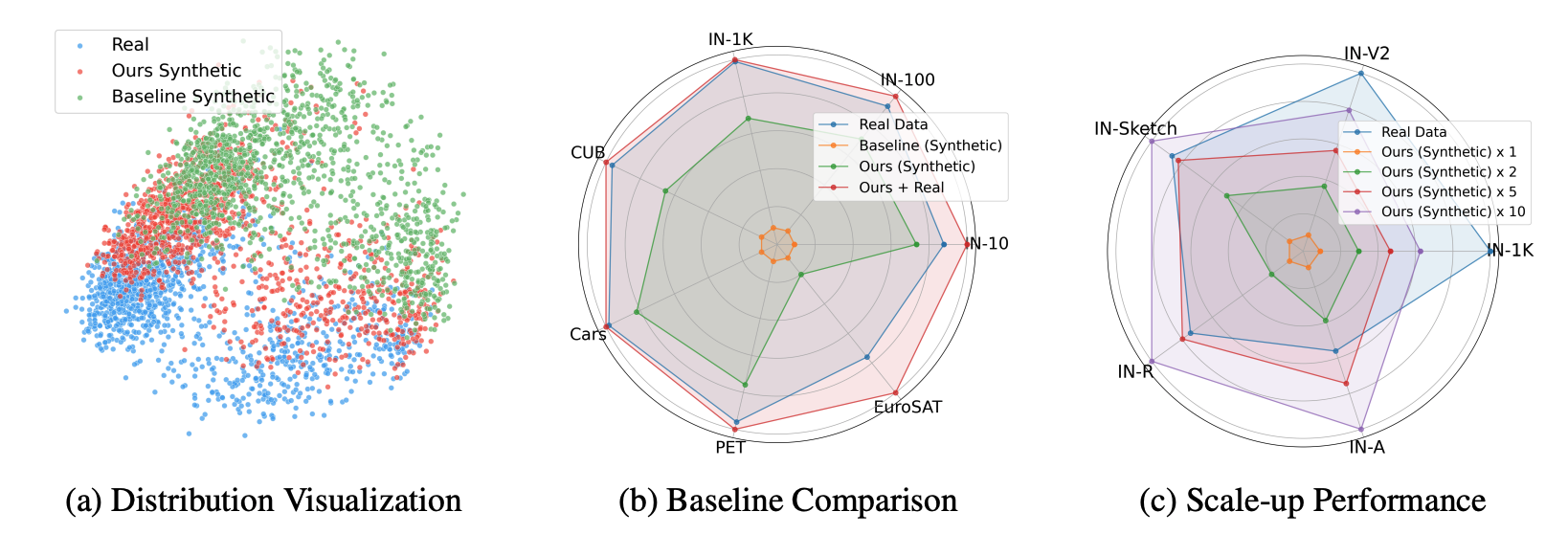

- 2021.05 We won first prize in the CVPR 2021 Workshop challenge (Adversarial Machine Learning in Real-World Computer Vision Systems and Online Challenges; rank 1/1558).

- 2022.10 China National Scholarship, Zhejiang University, 2022

- Outstanding Student Scholarship, First Prize, Hainan University, 2018, 2019, 2020.

💬 Services

- Journal Reviewer:

  - IEEE Transactions on Neural Networks and Learning Systems

  - Neural Networks

  - IEEE Transactions on Pattern Analysis and Machine Intelligence

- Conference Reviewer: ICLR, AAAI, CVPR, ICML, ECCV, ICCV, NeurIPS.

💻 Internships

- 2021.11 - 2022.06, Sony AI, Research Intern, Tokyo.

- 2020.10 - 2021.10, Tencent, Youtu Lab, Research Intern, Shanghai.

- 2019.11 - 2020.04, Alibaba, AliExpress, Software Engineer, Hangzhou.

🎙 Miscellaneous

Travel

I enjoy traveling with my family and friends, and I am always excited to visit new places and learn about different cultures.

My cats

My wife and I have three cats together. They are adorable and have brought so much fun into our lives!